Exploring the Possibilities of AI in Music Production

Greetings, fellow mad-scientist music producers! Today, I want to dive into the world of artificial intelligence and its burgeoning role in music production. I have been experimenting with various AI tools for music, and I am amazed at the potential they offer. In this blog post, I will take you through my process of using AI to create a Studio 54-inspired disco house track. Let's get started!

Using AI To Generate Music From Text Prompts:

I have always enjoyed digging for obscure and unique samples to use in my productions. I have spent hundreds of hours flicking through forgotten vinyl in record stores or trawling YouTube for videos with few or no plays to sample audio from. With the rapid progress of AI music generation tools, a whole new world of possibilities has opened up for finding quirky, one-of-a-kind samples.

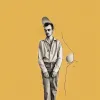

One of my favourite tools is Musicfy.lol, which has a feature that lets me enter a simple text prompt and generate music from it. For this track, I started with the prompt “Studio 54 Disco, Wurlitzer, New York”. After generating the first sample, I was impressed with the drum sounds and decided to download the file. However, the generation contained more than just the drums I liked, so I needed a way to remove the rest.

Splitting Generations Into Stems:

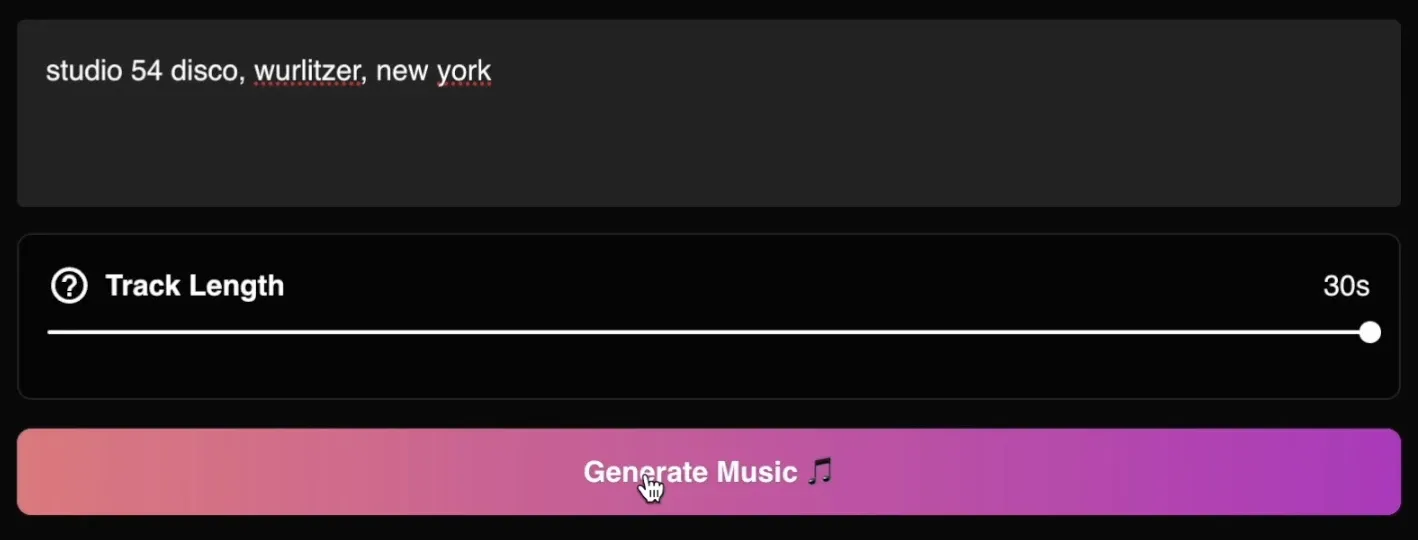

To manipulate the generated sample further, I loaded the file into Hit'n'Mix's RipX. This mind-blowing tool separates an audio file into stems such as voice, bass, instruments, and drums. By keeping only the drum and percussion layers, I was able to extract a simple drum pattern from the busy sample to serve as the base of my track. I exported the stems and loaded them into Ableton Live, my preferred DAW for warping and audio manipulation.

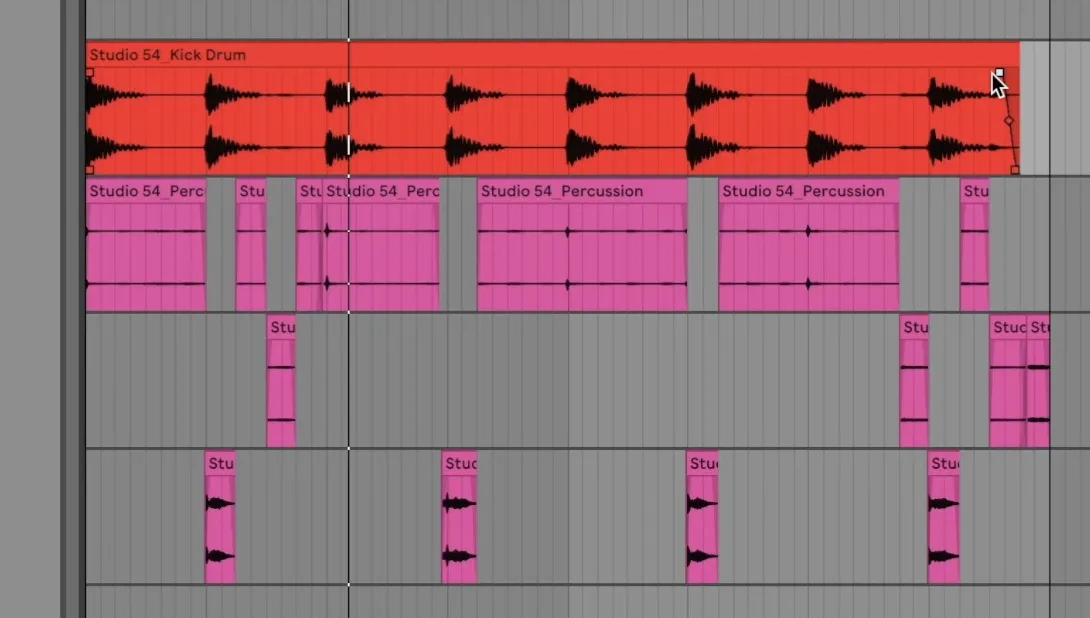

Arranging The Drums:

In Ableton, I edited the stems and started arranging the track. I focused on tightening up the timing and creating a loop that captured the essence of the Studio 54 disco sound. To make mixing and processing easier, I split the components of the single drum file onto separate layers, slicing the “clap/snare”, the “hats” and the “percussion” each onto its own track. The approach is deceptively simple: it gives you a surprising amount of control over a single sample. By layering multiple generated and isolated drum loops and making subtle adjustments to their timing and placement, I achieved a fat, groovy disco drum pattern reminiscent of much of the music played at Studio 54.
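For anyone curious what "tightening up the timing" means in numbers, here is a minimal Python sketch of grid quantization, the kind of correction a DAW's quantize function applies. It is not how Ableton implements it: the 120 BPM tempo, the 16th-note grid, the `strength` parameter and the clap timings are all illustrative assumptions, not values from my project.

```python
# A minimal sketch of grid quantization (not Ableton's actual implementation).
# Tempo, grid size and onset times are hypothetical example values.

def sixteenth_note_seconds(bpm: float) -> float:
    """Length of one 16th note in seconds, treating a beat as a quarter note."""
    return 60.0 / bpm / 4


def quantize(onsets, bpm, strength=1.0):
    """Move each onset (in seconds) toward the nearest 16th-note grid line.

    strength=1.0 snaps a hit fully onto the grid; 0.5 moves it halfway,
    which keeps some of the original loose, human feel.
    """
    grid = sixteenth_note_seconds(bpm)
    result = []
    for t in onsets:
        nearest = round(t / grid) * grid  # closest grid line to this hit
        result.append(t + (nearest - t) * strength)
    return result


if __name__ == "__main__":
    # Hypothetical clap hits sitting slightly off the grid at 120 BPM
    claps = [0.51, 1.48, 2.53, 3.47]
    print(quantize(claps, bpm=120))                # snapped to the grid
    print(quantize(claps, bpm=120, strength=0.5))  # pulled halfway in
```

Partial quantization is often worth trying on disco drums, since snapping every hit hard to the grid can make a loop feel mechanical.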

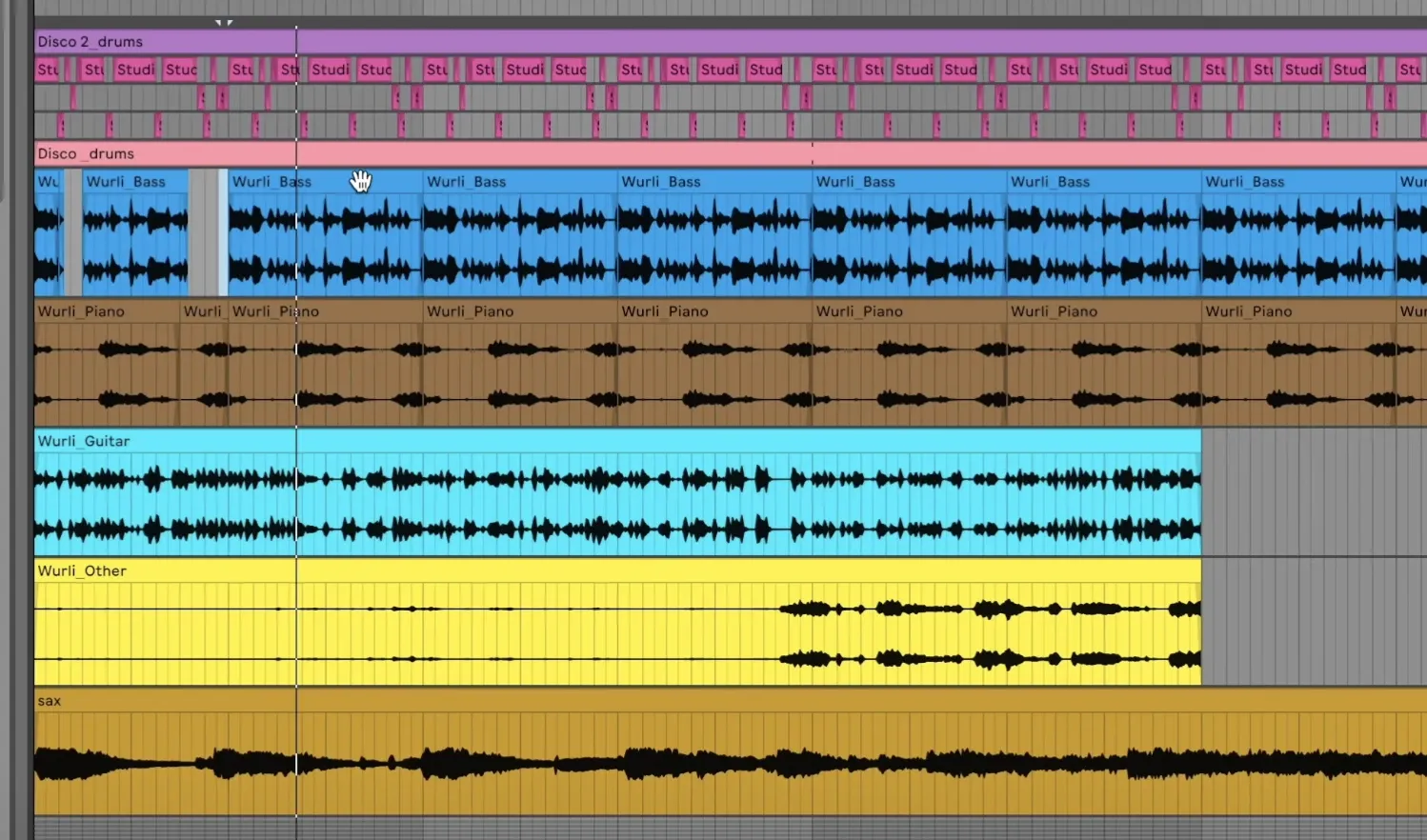

I generated another sample in Musicfy.lol using the prompt “Soulful Wurlitzer, Disco 1970s”, split it into its relevant parts in RipX and then imported them into Ableton. With these new stems, I was able to add a bassline, keyboard section and guitar riff.

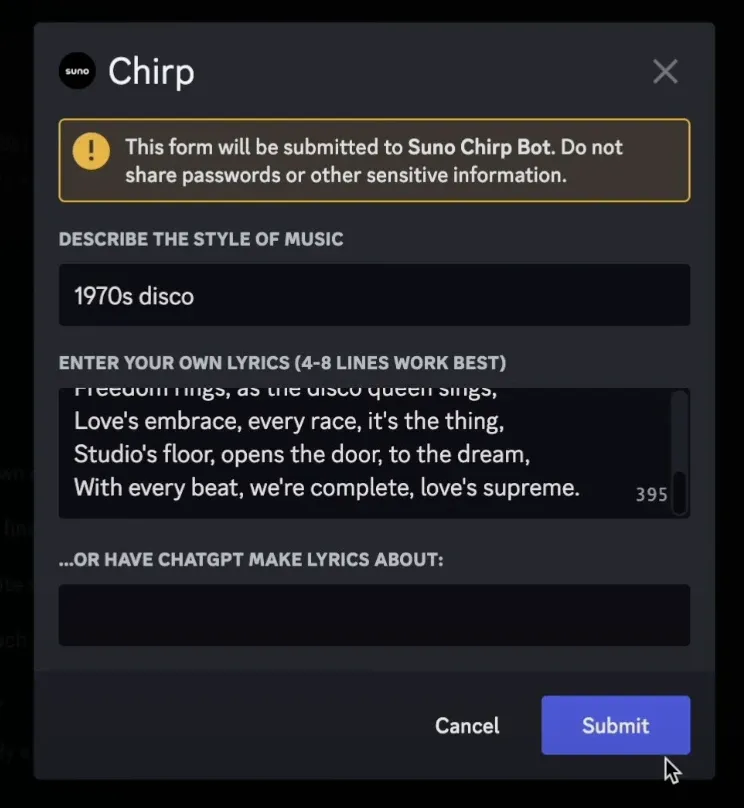

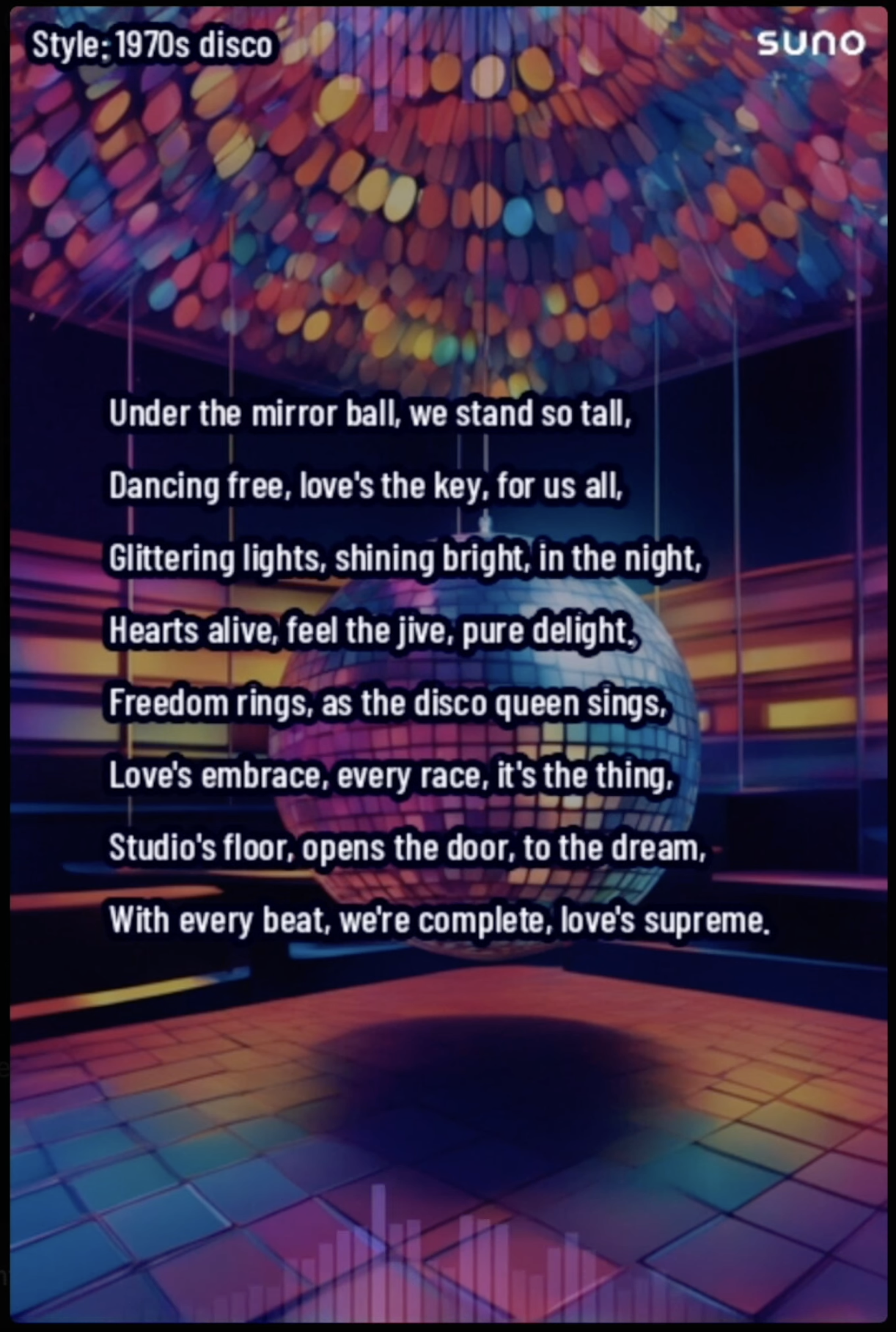

Adding Vocals and More Musical Elements:

To add some extra flavour to the track, I used Chirp, a full-song generation model developed by Suno AI, to generate some “1970s Disco”, this time with generated vocals singing lyrics written by ChatGPT. I downloaded the two resulting generations, split them in RipX and worked parts of them into the arrangement, ending up with extra drum layers, keys and guitar parts and, most excitingly, some vocals.

I tried to use Musicfy.lol to generate a saxophone solo in C minor, but it couldn't stick to the track's key. Once I brought the solo into the project, though, adjusting its pitch to fit was easy, so it wasn't a big problem. When I listened back with all of the elements turned on, the layered vocals, saxophone and other parts blended seamlessly into a soulful, authentic disco vibe.
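The arithmetic behind that pitch fix is simple: in equal temperament, each semitone multiplies frequency by 2^(1/12). Here is a minimal Python sketch of working out the shift. The "D minor" starting key is purely a hypothetical example, since I never identified which key the generated solo actually landed in, and the function names are made up for illustration.

```python
# A minimal sketch of transposition arithmetic in 12-tone equal temperament.
# The starting key used below is hypothetical, not the solo's real key.

NOTE_INDEX = {"C": 0, "C#": 1, "D": 2, "D#": 3, "E": 4, "F": 5,
              "F#": 6, "G": 7, "G#": 8, "A": 9, "A#": 10, "B": 11}


def semitone_shift(from_key: str, to_key: str) -> int:
    """Smallest shift in semitones (-5..+6) moving one tonic onto another."""
    diff = (NOTE_INDEX[to_key] - NOTE_INDEX[from_key]) % 12
    return diff - 12 if diff > 6 else diff


def pitch_ratio(semitones: float) -> float:
    """Frequency ratio produced by shifting the given number of semitones."""
    return 2 ** (semitones / 12)


if __name__ == "__main__":
    shift = semitone_shift("D", "C")  # e.g. a solo in D minor moved to C minor
    print(shift, round(pitch_ratio(shift), 4))
```

Taking the smallest shift keeps the transposition within half an octave, which generally means fewer audible artefacts than pushing a part a long way up or down.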

Final Touches:

Throughout the production process, I made various adjustments to create space with rests and to highlight specific elements by making parts quieter or louder. I chopped up parts of the guitar, bass and keys to create dynamic sections and added some panning to widen the stereo field. This matters because the AI tools I used all generated mono audio, so some simple panning can add a lot of depth. By fine-tuning the arrangement and the mix of the stems, I ended up with a polished, lively sound.
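To show what panning actually does to a mono stem, here is a minimal Python sketch of a constant-power pan law combined with a level change in decibels. Treat it as an assumption-laden illustration: the cos/sin pan law with a -3 dB centre is one common convention, DAWs (Ableton Live included) may use different pan laws internally, and the guitar samples are made-up numbers.

```python
import math

# A minimal sketch of mono-to-stereo panning with a constant-power pan law.
# The -3 dB-centre cos/sin law is one common convention, assumed here.


def db_to_gain(db: float) -> float:
    """Convert a level change in dB to a linear amplitude multiplier."""
    return 10 ** (db / 20)


def pan_mono(samples, position, gain_db=0.0):
    """Return (left, right) channel lists for a mono signal.

    position runs from -1.0 (hard left) through 0.0 (centre) to 1.0 (hard right).
    Because cos**2 + sin**2 == 1, the total power stays constant, so a part
    keeps roughly the same perceived loudness as it moves across the field.
    """
    angle = (position + 1) * math.pi / 4  # maps -1..1 onto 0..pi/2
    gain = db_to_gain(gain_db)
    left_gain = math.cos(angle) * gain
    right_gain = math.sin(angle) * gain
    left = [s * left_gain for s in samples]
    right = [s * right_gain for s in samples]
    return left, right


if __name__ == "__main__":
    guitar = [0.0, 0.5, 1.0, 0.5]  # hypothetical mono stem samples
    left, right = pan_mono(guitar, position=-0.3, gain_db=-3.0)
    print([round(x, 3) for x in left])
    print([round(x, 3) for x in right])
```

Panning a part slightly left and a complementary part slightly right is often enough to pull mono generations apart in the mix.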

Conclusion:

Using artificial intelligence in music production opens up a huge range of possibilities and adds a fresh dimension to the creative process. With the ability to generate unique sounds and separate audio into stems, AI creates new avenues for experimentation and innovation. Even with these early tools, sampling has taken on a whole new meaning. Instead of digging through old records and cassettes, we're “digging” through prompts to generate unique pieces of audio that inspire our next song. These tools let producers like me explore new genres and styles while still keeping a personal touch. It's a thrilling evolution, merging creativity with technology in the most harmonious way. I am excited to keep exploring the potential of AI in my music production journey, and I hope you found this blog post inspiring and informative. Until next time, happy creating!